Dear Phil: A letter to a colleague about Design Thinking and L&D

Dear Phil:

Always great to hear from you, and I’m sharing some of my thoughts and findings from the Design Thinking MOOC I tackled a little while back. I’m glad you asked me to do this, and I hope you don’t object to the approach, but I figure this “letter” will let me get into a little more detail and spark some Q&A.

So, here goes.

As you know, particularly from our time on that joint ID project in 2011, I’m a big fan of rapid and flexible processes. Yeah, I know that I was a little platform-focused at the time, but I really wanted to get away from that slow, deliberate, linear design process that just doesn’t play nicely with the complexities of online asset development. The rapid prototyping model I espoused was, on reflection, good for sparking some thoughts about different ways to do things and for explaining a new approach. However, I admit it was a little light on the details of exactly how to make it work in practice.

Enter Design Thinking.

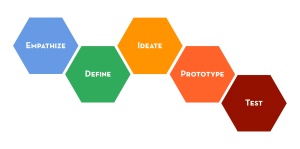

This deceptively simple process is the basis for my new vision of L&D problem solving. When we think about it, Phil, that’s really what we tend to do as IDs: we solve problems. Sometimes we solve big ones; sometimes we have to tackle a whole bunch of small ones to get past a roadblock.

Here’s what I really like about it. First, it’s a people-centric process and it starts with Empathy. Sure, that’s not a stretch for us as L&D types, but it’s important that we understand the people involved and exactly who we are designing for. Next, it forces us to actually define the problem that needs to be solved (this Business Insider article calls this “defining the challenges”). So, in some sense, we can treat this like a needs assessment, but this process could also be embedded within a larger ID task. If we are trying to figure out the best kind of activity for learning a performance-based task, we could use this process to get lots of ideas out, focus on a couple for prototyping, and then test them. The other big part that I like is that we learn it’s OK to try different ideas and see how they work long before we get into hard-core development of a solution. That traditional, linear process we tend to follow is predicated on the idea that we are working on one solution in the hope that it is the right one, and only after fully implementing it do we consider revisions. In Design Thinking, we get to explore lots of different ideas, and there are even some suggestions for how we could come up with them. For example:

- What are the most obvious solutions for this problem? (even things that you know already exist)

- What can you add, remove or modify from those initial solutions?

- How would a 5-year-old child solve the problem?

- How would you solve the problem if you had an unlimited budget?

- How would you solve the problem without spending any money?

- How would you solve this problem if you had control over the laws of nature (think invisibility, teleportation, etc.)?

What you’re probably seeing, as I did, is that it’s not about the rapid production of content (although that’s helpful if you can save time/money where appropriate) but it’s about moving a little faster and being more agile in the ideas, prototypes, and testing department. Where possible, it’s also about changing the mindset of our stakeholders about what Instructional Design is and what goes into the process. I also love that Design Thinking lets us take a different approach to user/stakeholder feedback.

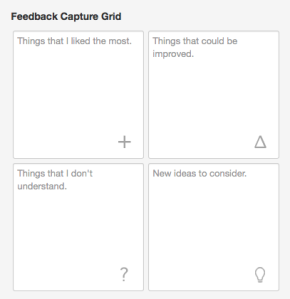

In this case, it’s less about QA of content and proofreading (still important) and more about giving us the ability to guide the feedback into contexts that make sense to us (while pushing our stakeholder(s) to put context into their commentary).

I really, really wish we had used something like this on our project because I think we could have asked some better questions along the way. If nothing else comes to fruition from the MOOC, I plan to use this grid for future feedback activities. I will also promote its use (or basic principles) among my colleagues.

The other sneaky thing I like about the DT approach is that it forces the ID to step outside their own preconceived notions of a solution. You know how much energy we spend trying to sell people on our interpretation of their needs, and sometimes it’s still met with a collective “meh.” This is where Ideate comes in. Once we have that empathic sense, and we have defined the problem, we can start looking at ideas. Lots of ideas. Crazy ideas. You know, the proverbial “throw it against the wall and see what sticks” kind of thing.

Where we did succeed on our project, albeit with a different model, was on the concepts of Prototyping and Testing. I think this is where you and I found some serious alignment, because you could see without prompting the value of putting a couple of prototypes for the learning solution together, hashing them out, and refining them until we had something we knew could satisfy the learning objectives but would also provide a reasonably engaging learning experience. With Design Thinking, I look at Prototyping as an extension of the Ideate process, and I come up with a number of different looks, feels, and features to explore. Given our experiences with a cloud-based platform, it was easier for us to think about lifecycle and maintenance issues, and they were easy to factor into the framework of the prototypes.

While Design Thinking won’t always get the users enthusiastic about making time for testing, it does help because we are capturing a lot more than just QC glitches. It’s not that QC isn’t important; it’s just that we can manage the bigger-picture user experience issues through feedback and let the QA folks handle the typos and other things that might occur when we get into full-blown development of the solution. The approach here makes the user think about the four questions in a more positive and helpful frame of mind. The other thing I really, really like about using this method is that we aren’t stuck using Likert-style rating scales as the basis for our feedback. Because the users are so integrated into the Design Thinking process, we can use their comments and statements as the basis for deeper questioning and exploration of their reactions to the various prototypes. It’s also a way of closing the loop on their initial thoughts and feelings that we captured when defining the original problem. It’s so organic and so instinctual that it just makes sense to leverage this framework for ID.

It may seem a little cliché, Phil, but I think there’s a bright road ahead for us in Instructional Design from adopting new ideas like Design Thinking. A growing number of practitioners and experts in my PLN are seeing it as a nearly ideal blend of agility, flexibility, and user-centric thinking. I’ve found that it minimizes some of the temptations to skip things like analysis because Empathy is so critical to Problem Definition. Next time we chat, I’ll share some of the behind-the-scenes stuff I’ve been doing so you can see some of the Design Thinking for ID in action.

Stay in touch. Best to you & yours,

-Mark

Posted on January 24, 2015.

Mark Sheppard is the Training Coordinator for Northern Lights Canada in Oshawa, ON. Learn more about Mark at http://about.me/marksheppard